Azure Storage is Microsoft’s cloud storage solution for modern data storage scenarios. It offers highly available, massively scalable, durable, and secure cloud storage for a variety of data objects. Data objects in Azure Storage can be accessed from anywhere in the world through a REST API over HTTP or HTTPS. For developers building apps or services with .NET, Java, Python, JavaScript, C++, or Go, Azure Storage also offers client libraries. Developers and IT professionals can write scripts in Azure PowerShell and Azure CLI for data management or configuration tasks. Users can also interact with Azure Storage through the Azure portal and Azure Storage Explorer.

Azure Storage’s Benefits

For programmers and IT professionals, Azure Storage services offer the following benefits:

Durable and Highly Available: Redundancy ensures that your data is safe even in the event of transient hardware failures. You can also choose to replicate data across data centers or geographical regions for added protection against local catastrophes or natural disasters. Data replicated in this way remains highly available even during an unexpected outage.

Secure: All data written to an Azure storage account is encrypted by the service. Azure Storage gives you fine-grained control over who has access to your data.

Scalable: Azure Storage is designed to be incredibly scalable in order to meet the data storage and performance needs of contemporary applications.

Managed: Azure handles hardware maintenance, updates, and critical issues on your behalf.

Accessible: Data in Azure Storage can be accessed from anywhere in the world over HTTP or HTTPS. In addition to a mature REST API, Microsoft provides client libraries for Azure Storage in a number of languages, including .NET, Java, Node.js, Python, PHP, Ruby, Go, and others. Azure Storage supports scripting in Azure PowerShell or Azure CLI. Additionally, the Azure portal and Azure Storage Explorer make it simple to work with your data graphically.
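To make the "accessible over HTTP or HTTPS" point concrete, the sketch below builds the endpoint URL for a resource in a storage account. It assumes the default public endpoint pattern `<account>.<service>.core.windows.net`; the account, container, and blob names are hypothetical, and `storage_url` is an illustrative helper, not part of any Azure library.

```python
from urllib.parse import quote

# Services covered in this article and the subdomain each one uses.
SERVICE_SUBDOMAINS = {"blob": "blob", "file": "file", "queue": "queue"}


def storage_url(account: str, service: str, *path_parts: str, https: bool = True) -> str:
    """Build the REST endpoint URL for a resource in a storage account."""
    if service not in SERVICE_SUBDOMAINS:
        raise ValueError(f"unknown service: {service!r}")
    scheme = "https" if https else "http"
    host = f"{account}.{SERVICE_SUBDOMAINS[service]}.core.windows.net"
    # Percent-encode each path segment so spaces and similar characters are safe.
    path = "/".join(quote(part, safe="") for part in path_parts)
    return f"{scheme}://{host}/{path}"


# Hypothetical account, container, and blob names.
print(storage_url("mystorageaccount", "blob", "images", "team photo.png"))
```

A request to such a URL still has to be authorized, for example with a shared access signature, unless the resource has been made publicly readable.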

Azure Blob Storage and Its Features

Azure Blob Storage is Microsoft’s object storage solution for the cloud. Blob storage is optimized for storing massive amounts of unstructured data. Unstructured data is data that doesn’t adhere to a particular data model or definition, such as text or binary data.

Microsoft Azure Blob Storage features:

Access to Unstructured Data and Scalable Storage

Azure Blob Storage lets you build data lakes for your analytics needs, and it provides storage for building reliable cloud-native and mobile applications. Use tiered storage to reduce the cost of long-term data retention, and scale up flexibly for high-performance computing and machine learning workloads.

Develop dependable cloud-native applications

Blob storage was built from the ground up to meet the scalability, security, and availability demands of cloud-native, web, and mobile application developers. Use it as a foundation for serverless architectures such as Azure Functions. Blob storage also provides a premium, SSD-based object storage tier for interactive and low-latency applications. It supports the most popular development frameworks, including Java, .NET, Python, and Node.js.

Store petabytes of data effectively

Multiple storage tiers and automated lifecycle management let you store vast amounts of rarely or infrequently accessed data cost-effectively. For example, you can use Blob storage in place of tape archives and stop worrying about migrating between hardware generations.
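As an illustration of automated lifecycle management, the sketch below assembles a policy document that moves aging blobs to cooler tiers and eventually deletes them. The field names follow the JSON format used by Azure lifecycle management policies and should be checked against the current documentation before use; the rule name, the `logs/` prefix, the day thresholds, and the `lifecycle_rule` helper are all assumptions made for this example.

```python
import json


def lifecycle_rule(name, prefix, cool_after_days, archive_after_days, delete_after_days):
    """Return one lifecycle management rule as a plain dict."""
    if not cool_after_days < archive_after_days < delete_after_days:
        raise ValueError("thresholds must increase: cool < archive < delete")
    return {
        "enabled": True,
        "name": name,
        "type": "Lifecycle",
        "definition": {
            # Only block blobs whose name starts with the given prefix.
            "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
            "actions": {
                "baseBlob": {
                    "tierToCool": {"daysAfterModificationGreaterThan": cool_after_days},
                    "tierToArchive": {"daysAfterModificationGreaterThan": archive_after_days},
                    "delete": {"daysAfterModificationGreaterThan": delete_after_days},
                }
            },
        },
    }


# Hypothetical rule: cool after 30 days, archive after 90, delete after a year.
policy = {"rules": [lifecycle_rule("age-out-logs", "logs/", 30, 90, 365)]}
print(json.dumps(policy, indent=2))
```

The resulting JSON is the kind of document that can be attached to a storage account through the Azure portal, Azure PowerShell, or Azure CLI.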

Build solid data lakes

Azure Data Lake Storage is a highly scalable and cost-effective data lake solution for big data analytics. It combines the power of a high-performance file system with massive scale and economy to help you reduce your time to insight. Data Lake Storage extends the capabilities of Azure Blob Storage and is optimized for analytics workloads.

Scale up for HPC or out for IoT devices in the billions

Blob storage has the capacity to meet both the high-throughput requirements of HPC applications and the billions of data points flowing in from IoT endpoints.

Azure Files

Azure Files lets you set up highly available network file shares that can be accessed using the industry-standard Server Message Block (SMB) protocol, the Network File System (NFS) protocol, and the Azure Files REST API. Multiple VMs can therefore share the same files with both read and write access. You can also read the files using the REST interface or the storage client libraries.

One way that Azure Files differs from files on a corporate file share is that you can access the files from anywhere in the world using a URL that points to the file and includes a shared access signature (SAS) token. You can generate SAS tokens yourself; each one grants specific access to a private asset for a specific amount of time.
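The time-limited nature of a SAS token is visible in the URL itself. The sketch below reads the permissions (`sp`) and expiry (`se`) query parameters from a SAS URL and reports whether the token has lapsed. The URL is hypothetical and its signature is a placeholder rather than a valid token; the code also assumes the expiry uses the `YYYY-MM-DDTHH:MM:SSZ` form, which is only one of the timestamp formats a SAS may carry.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs, urlsplit


def describe_sas_url(url: str, now: datetime) -> dict:
    """Extract the permissions and expiry carried by a SAS URL's query string."""
    query = parse_qs(urlsplit(url).query)
    if "sig" not in query:
        raise ValueError("URL has no SAS signature (sig) parameter")
    # se = expiry time in UTC, sp = permissions such as "r" for read.
    expiry = datetime.strptime(query["se"][0], "%Y-%m-%dT%H:%M:%SZ").replace(
        tzinfo=timezone.utc
    )
    return {
        "permissions": query.get("sp", [""])[0],
        "expires": expiry,
        "expired": now >= expiry,
    }


# Hypothetical file URL; the signature is a placeholder, not a valid token.
url = (
    "https://mystorageaccount.file.core.windows.net/reports/2024/summary.pdf"
    "?sv=2022-11-02&sr=f&sp=r&se=2030-01-01T00:00:00Z&sig=PLACEHOLDER"
)
info = describe_sas_url(url, now=datetime(2025, 6, 1, tzinfo=timezone.utc))
print(info["permissions"], info["expires"].isoformat(), info["expired"])
```

This only inspects the token on the client side. The service itself validates the signature and rejects requests made after the expiry time.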

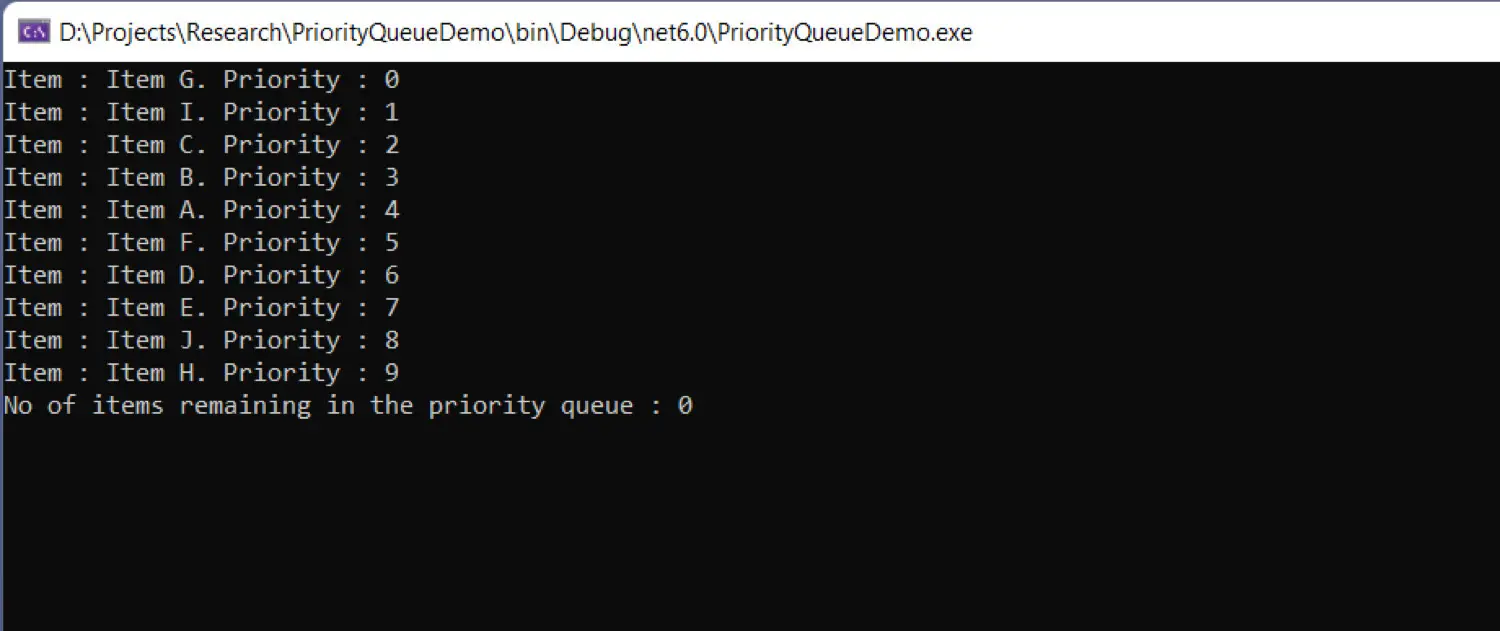

Queue Storage

The Azure Queue service is used to store and retrieve messages. A queue can hold millions of messages, and each message can be up to 64 KB in size. Queues are generally used to store lists of messages to be processed asynchronously.

For example, say you want your customers to be able to upload images, and you want to create a thumbnail for each one. You could make the customer wait while you create the thumbnails during the upload. An alternative is to use a queue. When the customer finishes their upload, write a message to the queue. Then have an Azure Function retrieve the message from the queue and create the thumbnails.
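The thumbnail scenario can be sketched as a producer and a worker. To keep the example runnable without an Azure account, it uses Python's in-memory `queue.Queue` as a stand-in for an Azure queue; in a real application the put and get calls would presumably be replaced by `QueueClient.send_message` and `QueueClient.receive_messages` from the `azure-storage-queue` package. The function names, container name, and thumbnail path layout are hypothetical.

```python
import json
import queue

MAX_MESSAGE_BYTES = 64 * 1024  # per-message size limit described above

# Local stand-in for an Azure queue so the sketch runs without an account.
thumbnail_jobs = queue.Queue()


def on_upload_finished(container: str, blob_name: str) -> None:
    """Producer: called once the customer's upload completes."""
    message = json.dumps({"container": container, "blob": blob_name})
    if len(message.encode("utf-8")) > MAX_MESSAGE_BYTES:
        raise ValueError("message exceeds the 64 KB queue limit")
    # The message references the image rather than embedding its bytes.
    thumbnail_jobs.put(message)


def process_pending_jobs() -> list:
    """Worker (an Azure Function in the scenario): drain the queue."""
    created = []
    while not thumbnail_jobs.empty():
        job = json.loads(thumbnail_jobs.get())
        # Placeholder for the real image resizing step.
        created.append(f"{job['container']}/thumbnails/{job['blob']}")
    return created


on_upload_finished("photos", "cat.jpg")
on_upload_finished("photos", "dog.jpg")
results = process_pending_jobs()
print(results)
```

Keeping only a reference to the image in the message keeps it well under the size limit, and the customer's upload returns without waiting for the thumbnails.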

Azure offers a number of storage-related tools and services. To find out which Azure technology suits your scenario, see Review your storage options in the Azure Cloud Adoption Framework.